Robot Perception and Navigation in Challenging Environments

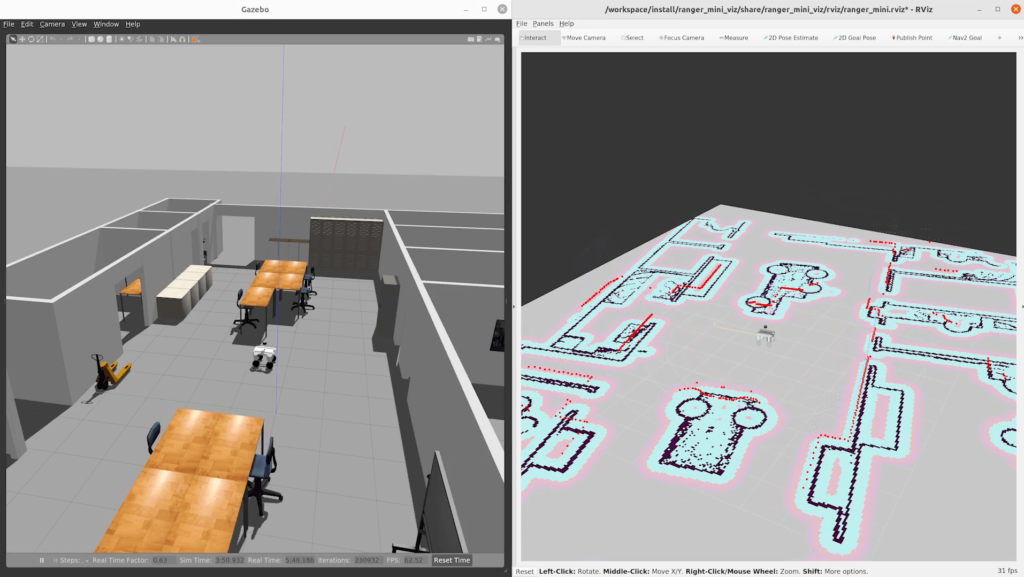

Sophisticated robot perception is essential for robotic navigation in indoor settings (shipping container facilities at ports, office buildings, etc.) because navigation depends heavily on both the task at hand and the environment. In many cases, it requires customized algorithms, such as deep neural networks for perception and SLAM (Simultaneous Localization and Mapping) for localization and mapping, to address these challenges.

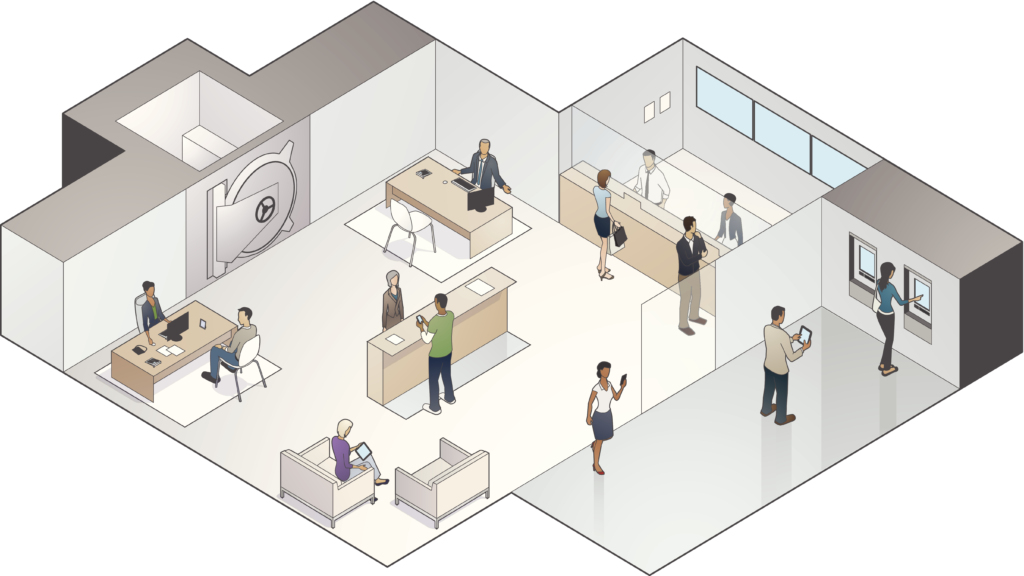

Robotic navigation goes beyond steering mechanisms like swerve drives. It becomes even more challenging in an uncontrolled indoor environment where obstacles move, new objects are introduced, and robots have to operate autonomously alongside humans.

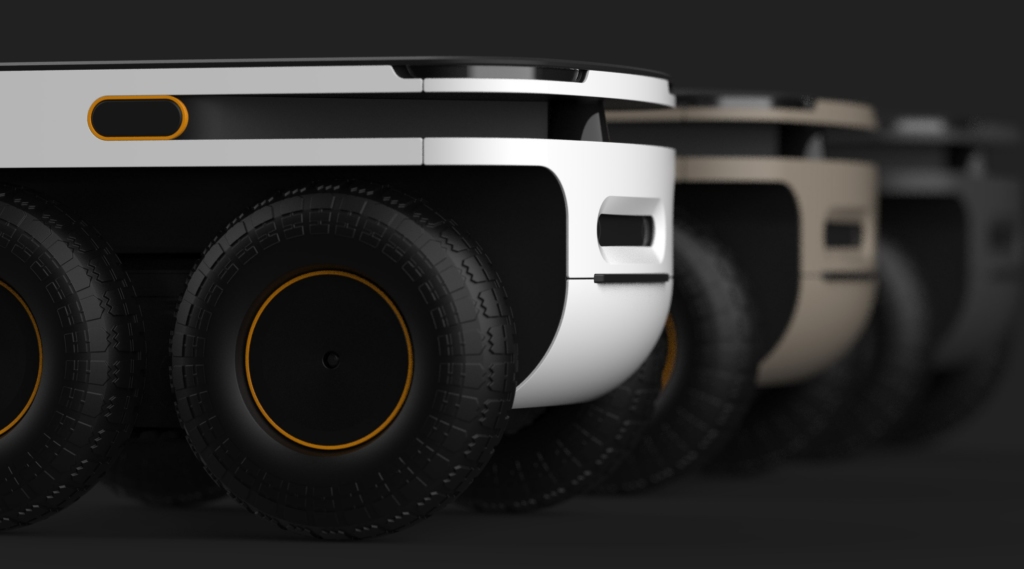

In a controlled environment setting such as a factory or a warehouse, an AMR (Autonomous Mobile Robot) can navigate along specific planned routes with a minimal sensor stack. But in an office space or a busy site such as a maritime port, the configurations change, and there are no dedicated lanes that are specifically laid out for robot navigation. Therefore, an AMR has to rely on a broad array of sensors and the underlying perception algorithms (like object detection and scene segmentation) to process its surroundings, then use these results to guide its path planning and navigation.

Additionally, to collaborate with humans in both controlled and uncontrolled environments, the robot needs to perceive people and their relation to itself. For example, it should be able to differentiate between a human colleague and a guest, or estimate its distance to the nearest colleague whose help it needs to complete a task.

So, perception is a critical link in a robot’s perception-action loop: it provides the scene information that navigation depends on, especially in non-ideal environments where objects, obstacles, and humans are moving or interfering with the robot.

DL (Deep Learning)-based perception

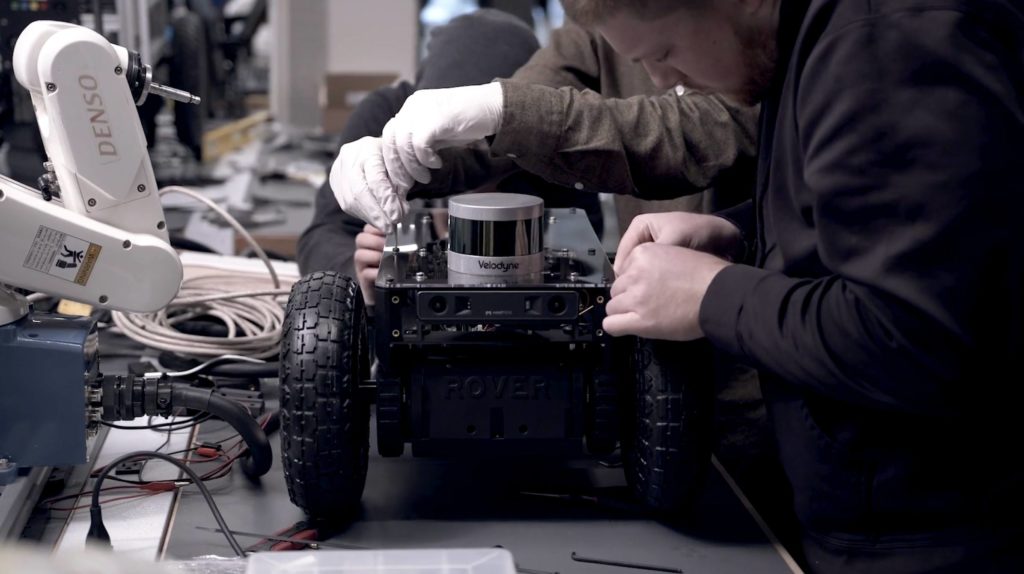

Perception, in general, requires a variety of hardware integrations (LiDAR, RGB and Depth cameras, and various other proximity sensors) supported by the algorithms to make them function in unison. That’s where ML (Machine Learning) comes into play.

Learning-based ML/DL models are one type of algorithm used to solve perception challenges. A DL model is typically trained by first preparing the data it has to learn from, which involves cleaning and labeling the data correctly. The model is then trained over multiple epochs, using a loss function to measure the error between the ground-truth labels and the model’s predictions. As the loss converges over the epochs, for example when it falls below a chosen threshold or stops improving, the trained model reaches a stable inference accuracy.
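
To make the role of the loss concrete, here is a minimal sketch of an epoch-based training loop. It is not the training code used for the door detector: it fits a toy one-feature logistic model in plain Python, and the data, learning rate, and stopping tolerance are all illustrative assumptions.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def binary_cross_entropy(y_true, y_pred) -> float:
    """Mean loss between ground-truth labels and predicted probabilities."""
    eps = 1e-12  # clip predictions to avoid log(0)
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


def train(features, labels, epochs=200, lr=0.5, tol=1e-4):
    """Fit w, b by gradient descent; return them with the per-epoch loss history."""
    w, b = 0.0, 0.0
    history = []
    n = len(features)
    for _ in range(epochs):
        preds = [sigmoid(w * x + b) for x in features]
        history.append(binary_cross_entropy(labels, preds))
        # Assumed stopping rule: the loss has stopped improving noticeably.
        if len(history) > 1 and abs(history[-2] - history[-1]) < tol:
            break
        grad_w = sum((p - t) * x for p, t, x in zip(preds, labels, features)) / n
        grad_b = sum(p - t for p, t in zip(preds, labels)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, history


# Hypothetical toy data: one feature per sample, label 1 = "open", 0 = "closed".
random.seed(0)
features = [random.gauss(-1.0, 0.5) for _ in range(50)]
features += [random.gauss(1.0, 0.5) for _ in range(50)]
labels = [0] * 50 + [1] * 50

w, b, history = train(features, labels)
print(f"epochs run: {len(history)}, first loss: {history[0]:.3f}, last loss: {history[-1]:.3f}")
```

Plotting `history` against the epoch index gives the familiar loss curve. A real detector has millions of parameters and a more elaborate loss, but the loop has the same shape.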

Iteration matters in a DL-based perception model training process. Commonly used object detection models are trained iteratively. In the first iteration, the model is trained to meet some baseline requirements. In the following iterations, the focus shifts to tuning the model for the end application.

A perception use case for assisting robot navigation

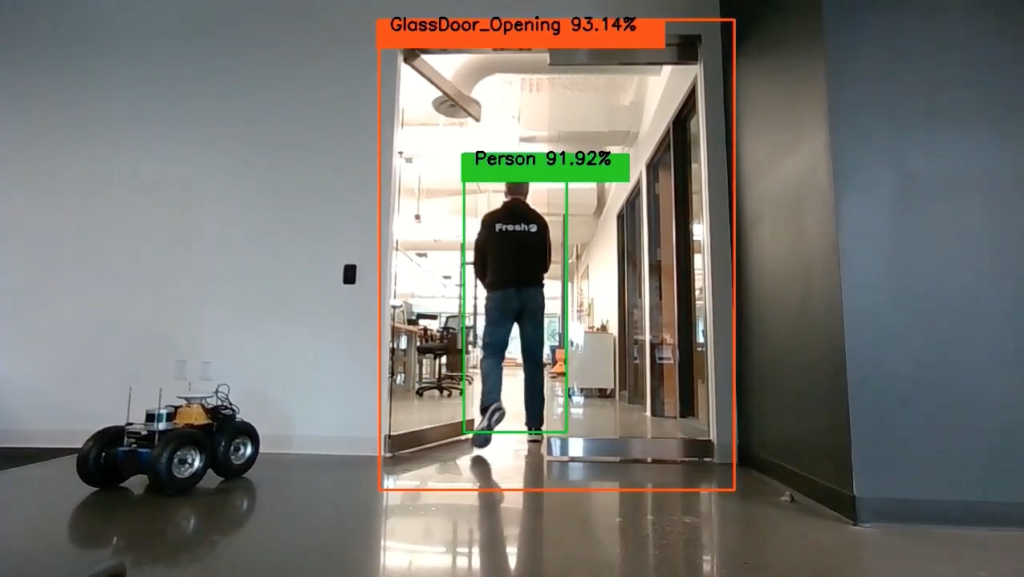

One specific challenge is detecting doors and their status so that a robot can navigate a non-ideal environment such as an office. Good robotic perception is needed to detect whether an office door is open, closed, or semi-open. The task becomes more challenging and interesting when the door is transparent, such as a glass door, with few obvious textures or features for the perception model to learn from.

To assist AMR navigation in an office, we iteratively trained a YOLO object detection model to detect the wooden and glass doors and their open/closed/semi-open status in our building.

In our use case, for the baseline model, we started training the model with a set number of 2D RGB images of each type and status of the doors the robot would encounter during its navigation.

The baseline model was then tested, and where its detections were wrong, techniques like data augmentation were used to improve it. Data augmentation simply means adding variations to the training data that help the model predict or detect better. There are a couple of caveats when using data augmentation in model training.

- For our use case, it helps to add more images of objects behind the door. When the glass door is open, these objects show their true features, such as size and color. When the glass door is closed, the same objects may appear distorted due to the refraction of light through the glass. The model may then learn the differences in the observed features with and without the glass, and so infer the presence of a glass door and its status from an onboard camera image.

- While augmenting the data, one can also add crops of images that focus on easily identifiable features, like door handles and joints, to make the model even better at recognizing glass doors. However, too many such feature-specific crops can skew the dataset and stop the model from learning a generalized pattern that reliably differentiates all types of doors. In that case, the model overfits the image data it was trained on, which is something to avoid for general-purpose door status inference.
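
As a rough illustration of what augmentation looks like in code, the sketch below applies three generic transformations (horizontal flip, brightness change, and crop) to a tiny grayscale image stored as nested lists. These are common augmentations chosen for illustration, not the specific pipeline used for the door dataset. The YOLO-style normalized box format and all parameter values are assumptions.

```python
import random

# Assumed representations: an image is a list of rows of grayscale values (0..255);
# a box is YOLO-style (x_center, y_center, width, height), normalized to 0..1.


def horizontal_flip(image, box):
    """Mirror the image left to right and move the box to match."""
    flipped = [row[::-1] for row in image]
    x, y, w, h = box
    return flipped, (1.0 - x, y, w, h)


def adjust_brightness(image, factor):
    """Scale pixel values, clamping to the valid 0..255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def crop(image, top, left, height, width):
    """Cut out a region, e.g. around a door handle."""
    return [row[left:left + width] for row in image[top:top + height]]


def augment(image, box, rng):
    """Return one randomly varied copy of a labeled image."""
    out_img, out_box = image, box
    if rng.random() < 0.5:
        out_img, out_box = horizontal_flip(out_img, out_box)
    out_img = adjust_brightness(out_img, rng.uniform(0.7, 1.3))
    return out_img, out_box


image = [[0, 50, 100], [150, 200, 250]]
box = (0.25, 0.5, 0.2, 0.4)
new_image, new_box = augment(image, box, random.Random(42))
```

Note that geometric transformations must update the labels too: after a flip, the bounding box has to move with the door, otherwise the augmented sample teaches the model the wrong location.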

There are several implementation considerations when we plug the door detection feature into the robot software pipeline to assist its navigation in a challenging environment.

- The trained deep learning model for the end perception application must run at the desired speed with the required accuracy. Sometimes models are too large for fast inference. For example, in our glass door detection case, the trained model should detect the door, infer whether it is open or closed/semi-open, and send the result to the navigation stack in real time, so that the robot can quickly make a go/no-go decision while navigating through the doorway. The faster the robot moves, the faster the door status inference needs to be.

- The door detection logic should use a time-averaged majority vote over consecutive door status inferences from the image frames of the past couple of seconds, to rule out false positives inherent in DL-based inference.

- If the robot has an a priori global site map and knows its waypoint location, we can trigger the door detection model only at a waypoint in front of a door, which saves computational resources on the robot. Otherwise, the door detection model needs to run at all times, because a missed door status could mean the robot colliding with the door or failing its task.

- In the no-go case, when a robot faces a closed/semi-open door, we can design the robot behavior to ask for an operator’s help to open the door.
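
The voting and go/no-go logic from the list above could be sketched as follows. This is one possible implementation under stated assumptions, not the code running on the robot: the class and function names, the 2-second window, the minimum vote count, and the simple timing budget (which ignores how stopping distance grows with speed) are all hypothetical.

```python
from collections import Counter, deque


class DoorStatusVoter:
    """Majority vote over door-status inferences from the last `window_s` seconds."""

    def __init__(self, window_s: float = 2.0, min_votes: int = 5):
        self.window_s = window_s    # assumed window ("past couple of seconds")
        self.min_votes = min_votes  # hypothetical minimum evidence before deciding
        self._votes = deque()       # (timestamp_s, status) pairs, oldest first

    def add(self, timestamp_s: float, status: str) -> None:
        """Record one per-frame inference: 'open', 'closed', or 'semi-open'."""
        self._votes.append((timestamp_s, status))
        # Drop inferences that have fallen out of the voting window.
        while self._votes and timestamp_s - self._votes[0][0] > self.window_s:
            self._votes.popleft()

    def decision(self) -> str:
        """Return 'go' only when 'open' holds a strict majority, else 'no-go'."""
        if len(self._votes) < self.min_votes:
            return "no-go"  # too little evidence, so stay conservative
        counts = Counter(status for _, status in self._votes)
        return "go" if counts["open"] > len(self._votes) / 2 else "no-go"


def max_decision_time_s(detection_range_m, stopping_distance_m, speed_mps):
    """Rough time budget before the robot can no longer stop short of the door."""
    return (detection_range_m - stopping_distance_m) / speed_mps


voter = DoorStatusVoter()
# Six frames at 5 Hz, including one spurious 'closed' detection.
for i, status in enumerate(["open", "open", "closed", "open", "open", "open"]):
    voter.add(i * 0.2, status)
print(voter.decision())  # go

# Doubling the speed halves the time available for voting and deciding.
print(max_decision_time_s(3.0, 0.5, 1.0), max_decision_time_s(3.0, 0.5, 2.0))
```

Defaulting to "no-go" when evidence is thin reflects the asymmetry in the list above: waiting for an operator costs time, while a wrong "go" can damage the door or the robot.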

Other perception approaches to assist robot navigation

It is further possible to restructure the door detection algorithm by fusing multi-modal sensor data (LiDAR, radar, ultrasound, or ToF sensor) that could be on board the robot with the RGB image data from the camera to improve inference performance. The resulting RGB-D (color plus depth) information would provide additional depth features for training the models. Although data fusion adds complexity to the overall process, it can yield a reliably performing door detection algorithm. The developed model also needs to run efficiently on the usually limited compute power available on robots. An end-to-end perception pipeline implementation therefore also has to address proper model conversion for the target hardware, tooling, and software packaging.
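
The paragraph above describes fusing sensor data as training features. A simpler variant, shown here only as an illustrative sketch, is late fusion: each sensor produces its own estimate of P(open), and the estimates are combined in log-odds form under a naive assumption that the sensors are conditionally independent. The function names, the 0.9/0.1 range probabilities, and the margin are hypothetical, and the range sensor is assumed to be one that responds to glass.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a probability, clipped away from 0 and 1."""
    p = min(max(p, 1e-6), 1.0 - 1e-6)
    return math.log(p / (1.0 - p))


def range_to_open_probability(range_m, door_plane_m, margin_m=0.3):
    """Map a forward range reading to a rough P(open); values are illustrative."""
    # Seeing clearly past the door plane suggests nothing blocks the doorway.
    return 0.9 if range_m > door_plane_m + margin_m else 0.1


def fuse_open_probability(p_camera, p_range, prior=0.5):
    """Naive Bayes fusion of two P(open) estimates that share the same prior."""
    log_odds = logit(p_camera) + logit(p_range) - logit(prior)
    return 1.0 / (1.0 + math.exp(-log_odds))


# The camera is unsure about a glass door, but the range sensor sees a surface
# right at the expected door plane, so the fused estimate leans toward "closed".
p_camera = 0.6
p_range = range_to_open_probability(range_m=1.5, door_plane_m=1.5)
fused = fuse_open_probability(p_camera, p_range)
print(round(fused, 3))
```

When the two sensors agree, the fused probability is more confident than either alone. When they disagree, it moves back toward the prior, which is a reasonable cue to fall back on the conservative no-go behavior.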

Other efforts on that front include, but are not limited to, the following practices:

- More focus on data, i.e., data collection, data preparation, labeling, and augmentation for model training and algorithm development.

- Suitable MLOps for integrated perception development from data labeling to model training.

- Staying on top of current advancements in AI learning, specifically those related to perception: self-supervised learning, few-shot learning, and meta-learning, as well as current SLAM methods.

Beyond assisting the robot navigation, the aforementioned approaches and the learnings would also serve as building blocks for establishing a robust perception library for robots that supports typical AMR and general robotics use cases.

Need help with robot perception? Let’s connect.

As a nimble advanced engineering team, we focus on sound design and cost-effective engineering solutions that deliver value to our clients, rather than pursuing first-of-its-kind basic research. By continually exercising and optimizing our engineering craft, we are making our robots smarter in perception and data-driven decision-making, improving their perception-action loops in real-world scenarios and helping humans do their jobs better every day.

If you are interested in learning more, discussing the topics covered in this post, or partnering on your next project, let’s connect!