Machine Learning UI Starts with Trust

As the volume of data grows, the ability to detect patterns and surface them in a trustworthy, actionable way is key to remaining competitive.

Let’s start by defining a couple of terms:

UI (User Interface) is the means by which a user and a computer system interact. For the purposes of this article, UI refers to on-screen graphics, buttons, and messaging.

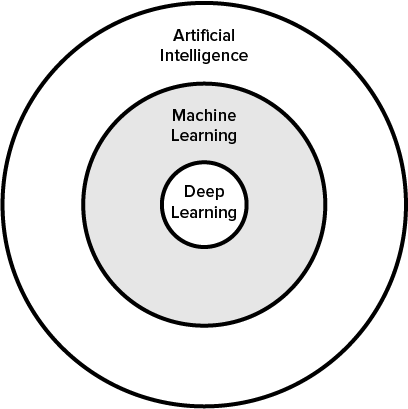

Machine learning is the science of making automated predictions based on patterns and relationships discovered in data. The term is often used interchangeably with artificial intelligence, but machine learning is considered a subset of artificial intelligence.

Design Considerations

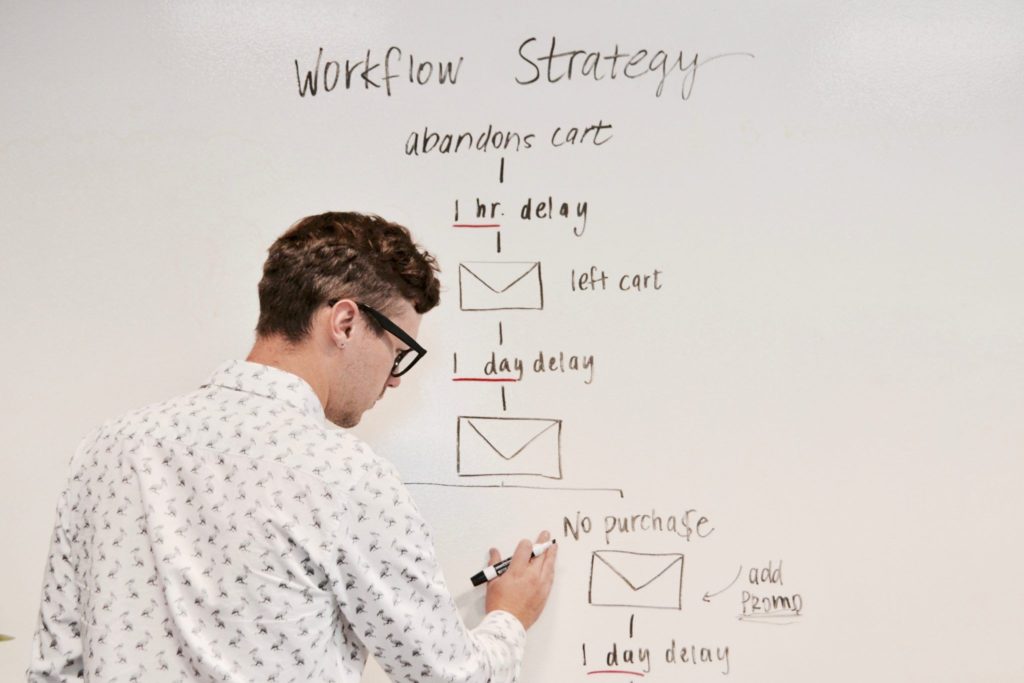

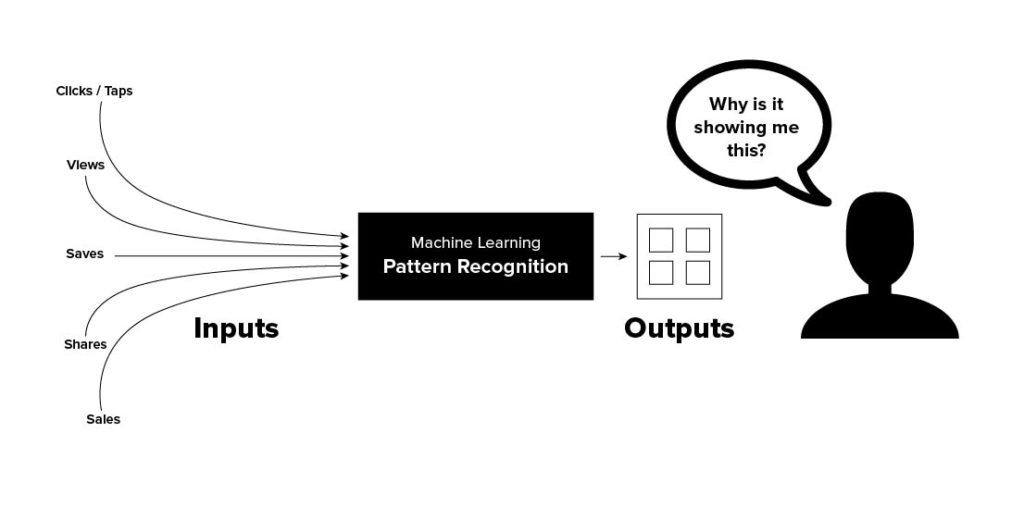

When users cannot see how a machine learning algorithm matches and compares data inputs to produce its outputs, they are left wondering why they are being shown a particular set of results.

The challenge for designers and developers is to bridge the gap between the input data and the output in a way that is trustworthy and relevant to the user’s task flow. This is done at two levels: global and local.

Global Transparency

Building user trust starts at the global level. Showing why a set of results is displayed encourages users to interact with the individual items in the list. Two familiar interfaces that handle global-level transparency well are Netflix and Amazon.

Both are simple examples of surfacing “more stuff like the stuff I’ve been looking at” in the hope that a user will watch or buy more. By answering the question “Why am I seeing this content?”, the interface entices the user to explore the individual items.

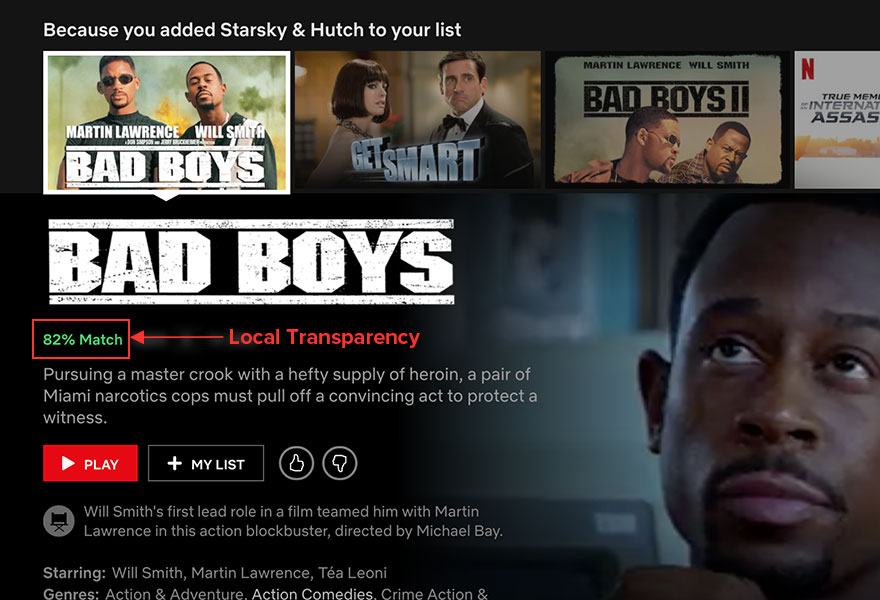
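One way to picture global transparency in code is to carry the reason for a recommendation list alongside the list itself, so the UI can always print a heading that answers “Why am I seeing this content?”. The sketch below is illustrative only: the `RecommendationRow` type, the `rowHeading` function, and the heading wording are assumptions made for this example, not how Netflix or Amazon actually implement it.

```typescript
// Hypothetical data shape: every list of recommendations carries its reason.
interface RecommendationRow {
  reason: { kind: "watched" | "purchased" | "other"; sourceTitle?: string };
  items: string[];
}

// Build the heading shown above a row of recommendations.
function rowHeading(row: RecommendationRow): string {
  const { kind, sourceTitle } = row.reason;
  if (kind === "watched" && sourceTitle) {
    return `Because you watched ${sourceTitle}`;
  }
  if (kind === "purchased" && sourceTitle) {
    return `Customers who bought ${sourceTitle} also bought`;
  }
  // No specific source item is known, so fall back to a generic heading.
  return "Recommended for you";
}

// Placeholder item names, used only to exercise the function.
const exampleRow: RecommendationRow = {
  reason: { kind: "watched", sourceTitle: "Bad Boys" },
  items: ["Title A", "Title B"],
};

console.log(rowHeading(exampleRow));
```

Keeping the reason in the same object as the items means a list can never be rendered without some explanation attached to it.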

Local Transparency

Sometimes users need a deeper understanding of why a specific item is part of a list before they make a decision. Local transparency provides more detail on why a specific item appears in a list and how it compares to the other items. The Netflix example below shows an 82% Match for Bad Boys.

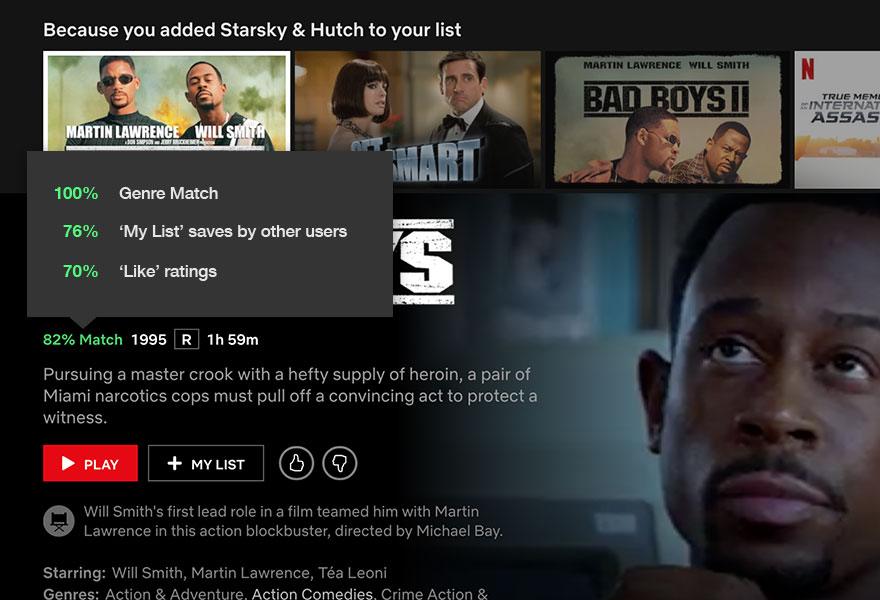

If there were a need to understand the reasoning behind the 82%, Netflix could add a pop-over that gives insight into how the algorithm arrived at that figure. The hypothetical design below shows the concept of using progressive disclosure in the form of a pop-over to explain the 82% Match rating.

Greater transparency about how the algorithm surfaced a specific item can build stronger trust and understanding, which leads to more accurate decisions.

While the Netflix and Amazon examples show machine learning applied in a consumer environment, machine learning algorithms can also upskill workers in a corporate environment. Combining machine learning with global and local transparency can cut down on filtering effort so users can act more quickly.
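The pop-over idea can be sketched as a match score that is stored together with the factors that produced it, so the UI can disclose them on demand. Everything here is hypothetical: the `MatchExplanation` type, the function names, the factor labels, and the point values are invented for illustration and chosen only so that they add up to the 82% from the example. This is not a description of Netflix's actual algorithm.

```typescript
// Hypothetical: one contributing reason and the points it adds to the score.
// Points are assumed to be non-negative for this sketch.
interface MatchFactor {
  label: string;
  points: number;
}

interface MatchExplanation {
  title: string;
  factors: MatchFactor[];
}

// The headline score is the sum of factor points, clamped to the 0-100 range.
function matchScore(explanation: MatchExplanation): number {
  const total = explanation.factors.reduce((sum, f) => sum + f.points, 0);
  return Math.min(100, Math.max(0, Math.round(total)));
}

// Lines of text for the pop-over: the headline first, then the top factors.
function popoverLines(explanation: MatchExplanation, maxFactors = 3): string[] {
  const topFactors = [...explanation.factors]
    .sort((a, b) => b.points - a.points)
    .slice(0, maxFactors);
  return [
    `${matchScore(explanation)}% Match for ${explanation.title}`,
    ...topFactors.map((f) => `${f.label}: +${f.points}`),
  ];
}

// Invented factors for illustration only.
const badBoys: MatchExplanation = {
  title: "Bad Boys",
  factors: [
    { label: "Popular with viewers like you", points: 12 },
    { label: "You often watch action comedies", points: 40 },
    { label: "Similar to titles you rated highly", points: 30 },
  ],
};

popoverLines(badBoys).forEach((line) => console.log(line));
```

Limiting the pop-over to a few top factors keeps the disclosure progressive: the list view shows only the percentage, and the detail appears when the user asks for it.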
